Drawing the scene

Now to we need to implement the drawing function that is called in the render loop. The render loop is already implemented with some basic code. Again we'll come back later to the code already here, for now we will focus on the drawing function.

The drawing function

The drawing function is implemented in a lambda function in order to be used from multiple places: - the render loop - the render output image mode (for tests)

Similarly to VAO initialization, the drawing function will require many indirections. Our goal is to traverse the scene graph, which is composed of nodes.

The nodes can refer to a mesh or a camera. For now we are only interested in nodes that refer to meshes. We need to use the matrix of the node and its mesh to draw it on screen.

Each node can have children, that must be processed the same way. This recursive structure for the data naturally leads to a recursive function to implement. We will put it in a std::function that is already declared in the drawing function.

Right now your drawing function should look like this:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 | |

// TODO ... because it is easier.

Replace // TODO Draw all nodes: implement a loop over the nodes of the scene (model.scenes[model.defaultScene].nodes) and call drawNode on each one. The parentMatrix for them should be the identify matrix glm::mat4(1).

Check compilation, run, commit and push your code.

Matrix reloaded

Before implementing the drawNode function, a reminder about matrices for 3D can be useful.

Most 3D objects are created by artists in a local space: the center of the scene is at the center of the object and axis are oriented in a coherent way relative to the object.

To put an object defined locally in a global 3D scene, at an arbitrary position, orientation and scale, we must apply a matrix to the coordinates of its vertices. This matrix is called the Model matrix of the object (also called localToWorld matrix, which is a better name in my opinion).

Each object of the global scene has its own Model matrix. If M denotes a model matrix and p_l a point in the local space, then p_w = M * p_l is the position of p in world space.

What is really of interest for us, however, is the view space, the space of the camera. To convert our points from world space to view space, we use the View matrix (also called worldToView matrix), which is computed from the parameters defining the camera (often with lookAt(eye, center, up) function).

Similarly, if V denotes the view matrix and p_w a point in world space, then p_v = V * p_w is the position of p in view space.

Finally, we have the Projection matrix than is used to project points on the screen (a few more operations are performed by the GPU before having real pixel coordinates).

We can combine all these matrices to define new ones that are directly used by our vertex shaders: - modelViewMatrix = view * model - modelViewProjectionMatrix = projection * modelViewMatrix

Another matrix must be computed to transform normals. I will not go into details, but normals need a special treatment in order to maintain the orthogonality with tangent vectors. The normalMatrix is defined by: - normalMatrix = transpose(inverse(mvMatrix));

These 3 matrices will need to be send to the GPU with glUniformMatrix4fv later.

The drawNode function

The part to replace in this section is // TODO The drawNode function

Now we can attack the drawNode function. The first step is to get the node (as a tinygltf::Node) and to compute its model matrix from the parent matrix. Fortunately for you, I included a helper function getLocalToWorldMatrix(node, parentMatrix). If you are interested you can take a look at the code, its mostly calls to glm function to perform the right maths.

In the drawNode function, get the node associated with the nodeIdx received as parameter. Create a glm::mat4 modelMatrix and initialize it with getLocalToWorldMatrix(node, parentMatrix).

Then we need to ensure that the node has a mesh:

Add an if statement to test if the node has a mesh (node.mesh >= 0).

Now in the body of the if statement:

Compute modelViewMatrix, modelViewProjectionMatrix, normalMatrix and send all of these to the shaders with glUniformMatrix4fv.

Next step is to get the mesh of the node and iterate over its primitives to draw them. To draw a primitive we need the VAO that we filled for it.

Remember that we computed a vector meshIndexToVaoRange with the range of vertex array objects for each mesh (this range being an offset and an number of elements in the vertexArrayObjects vector). Each primitive of index primIdx of the mesh should has its corresponding VAO at vertexArrayObjects[vaoRange.begin + primIdx] if vaoRange is the range of the mesh.

Get the mesh and the vertex array objects range of the current mesh.

Loop over the primitives of the mesh

Inside the loop body:

Get the VAO of the primitive (using vertexArrayObjects, the vaoRange and the primitive index) and bind it.

Get the current primitive.

Now we need to check if the primitive has indices by testing if (primitive.indices >= 0). If its the case we should use glDrawElements for the drawing, if not we should use glDrawArrays.

Implement the first case, where the primitive has indices. You need to get the accessor of the indices (model.accessors[primitive.indices]) and the bufferView to compute the total byte offset to use for indices. You should then call glDrawElements with the mode of the primitive, the number of indices (accessor.count), the component type of indices (accessor.componentType) and the byte offset as last argument (with a cast to const GLvoid*).

Implement the second case, where the primitive does not have indices. For this you need the number of vertex to render. The specification of glTF tells us that we can use the accessor of an arbritrary attribute of the primitive. We can use the first accesor we have with:

1 2 | |

glDrawArrays, passing it the mode of the primitive, 0 as second argument, and accessor.count as last argument.

We then have one last thing to implement, after the if (node.mesh >= 0) body: we need to draw children recursively.

After the if body, add a loop over node.children and call drawNode on each children. The matrix passed as second argument should be the modelMatrix that has been computed earlier in the function.

Check compilation, run, commit and push your code.

Interactive viewer

Now, if everything is OK in your code, you should be able to see something by running your program on a scene. My advice is to use the Sponza scene.

To run the program in viewer mode, you need to run in your terminal:

1 2 3 4 5 | |

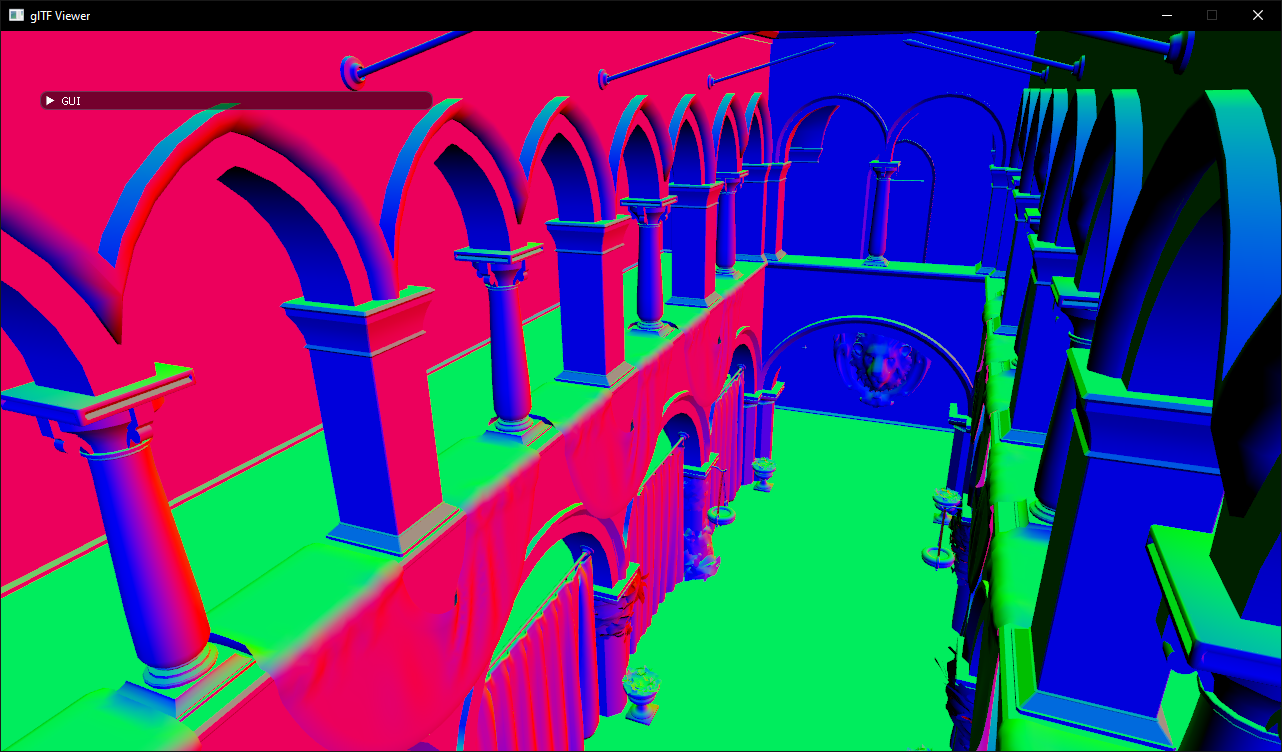

It should run the program and set a camera such that you see the following picture:

This is Sponza with normals displayed as colors ! A simple shader, but enough to see the 3D.

You can control the camera using classic First Perso control: ZQSD to move, the mouse to rotate, but also UP/DOWN arrows to go up or down and A/Z to turn your "head" left or right.

Render to image

For non regression unit tests, we will need our program to render to images (to be compared to reference images).

We will implement this feature in run() just before the render loop. If this mode is selected by the user (with --output PATH_TO_IMAGE passed on the command line), then the program should just draw the scene once and write the result in a png.

To implement this, I've written a function renderToImage in utils/images.cpp. Depending on the date you made your fork, you might have it or not.

Implement the --output functionnality

When the user put --output an_image.png on the command line, two things happen in the constructor of ViewerApplication:

- The window is not visible

- The m_OutputPath member variable is filled with the path of the output image, so it is not empty

We will use ther m_OutputPath variable to detect if the --output flag has been passed to the command line.

Just before the render loop, add an if statement to check if m_OutputPath is not empty.

The renderToImage function has the following prototype:

1 2 | |

So we need to allocate an array of unsigned char to store pixels. We will use 3 components (RGB image), meaning the total size of the array will be `width * height * numComponents*.

In the if body, create a std::vector<unsigned char> pixels with the good size, using the window dimensions for the image size.

Note that the gltf-viewer application optionally take on the command line --width and --height arguments to set the window size, or the image size in case of --output

It also takes a function to render the scene. Take note here that this function does not take any arguments. The functions drawScene we implemented earlier take a camera as argument. It means we cannot pass it directly to renderToImage, we have to put it in a function that takes no argument. A lambda is ideal for that. The call would look like:

1 2 3 | |

I don't want to go into too much details about lambdas (search google for tutorials, lambas are so great !), but the [&] means we "capture" all variables from the current scope by reference. It means we can access variables that are defined outside of the lambda. In particular, we can access cameraController and drawScene.

Add the previous call to renderToImage

We need two more things: flip the image vertically, because OpenGL does not use the same convention for that than png files, and write the png file with stb_image_write library which is included in the third-parties.

Check the prototype of flipImageYAxis, defined in images.hpp and add a call to it

Add the following code to output the png:

1 2 3 | |

Finally at the end of the if statement, returns 0. So in that mode, our application just render an image in a file and leave.